I borrowed this book from the library, not entirely sure what I was in for, but feeling the need to get the lowdown on 'digital liberties' at the very least. So out it was loaned, and I finally got round to reading it.

The Revolution will be Digitised: Dispatches from the Information War (the official website for the book can be viewed here) is written by Heather Brooke, the same journalist who helped expose the story of the politicians' expenses claims in 2009, and sparked a huge scandal in the UK. This is her third book, and charts the progression of the Cablegate affair, through her own eyes, and those of the hackers, journalists and politicians involved - most notably her dealings with Julian Assange and other members of WikiLeaks. The narrative is interspersed with discussions on the nature of the digital information age and what Brooke terms the 'information war' - the conflict between freedom of speech and its stifling in the name of national security.

I had mixed feelings towards this book. That may be partly because activism tends to make me rather uneasy. That doesn't mean that I don't question my betters or the world around me. It simply means that I'm one of those people who prefers to get on with their lives, and to have the right to be 'let alone' (as Brooke quotes via Louis Brandeis in her book). Consequently, I appreciate people like Brooke, whose mission in life is to give people like me the right to be 'let alone', and to have the power to question those betters should we feel the need to do so.

However, I wasn't too keen on the way the Cablegate sections were presented as a story-like narrative. I can see the effect Brooke was going for, setting a suspenseful background for the unfolding events, and making the characters more 'human'; but to me, that was its flaw - it gave her book the edge of a thriller or conspiracy theory that I really felt detracted from what she had to say, and from the point of her story. To her credit, however, she is even-handed and realistic in her portrayal of the people involved - particularly Assange, who has since become something of a cause célèbre in the information world. On that score, Brooke cannot be faulted.

I found her discourses on the 'information war' itself much more interesting. These centred on the difficulty in policing the internet, and how various governments are scrambling to set up some form of internet censorship in order to help preserve the status quo. I think many people will find it intriguing, if not disturbing, to find out just how much of the internet is censored right here in good old Blighty. Digitisation makes it far easier to communicate and to exercise free speech, but it also makes it easier for information to suddenly disappear. Just hit the delete key and it's gone. Whilst we may be on the cusp of an 'information democracy', we may just as much be facing an 'information totalitarian state'. Brooke's point is that we're teetering on the precipice of both, and what happens in the next few years could decide which hole we fall down.

At least one question was answered for me in this book. I had always wondered why, while the Internet Archive plugs away capturing and archiving the web willy-nilly, the UK Web Archive still has to go through the costly and time-intensive rigmarole of seeking a webmaster's permission before it can capture a site. The answer is in our different constitutions. In the US the First Amendment prevents the government from copyrighting official information. Here in the UK we have Crown Copyright, a proprietary system, meaning that the government can copyright a publication and thus suppress it. We have to ask permission to use public data. And that's probably the reason we're not allowed to use certain data sets for mashups in the Data Visualisation module.

But then, what do I know?

Blog for my creative musings

Wednesday, 28 March 2012

Wednesday, 21 March 2012

From the Shelf: Lost Languages by Andrew Robinson

I happen to have a thing about historical mysteries, and I also happen to have a thing about the history of writing. These two interests of mine seem to have come together admirably in Andrew Robinson's Lost Languages: The Enigma of the World's Undeciphered Scripts.

'Lost Languages' is a bit of a misnomer in this case. 'Lost Writing Systems' would've been a more accurate if less poetic title. While lost languages often walk hand in hand with lost writing systems, that isn't always expressly the case, as Robinson makes clear. For example, the famous case of the decipherment of Linear B showed that the Linear B tablets recorded a very archaic form of Greek. Likewise, ancient Egyptian hieroglyphs expressed a form of archaic Egyptian quite similar to Coptic, which was still a living (if only strictly liturgical) language. This isn't a book about the lost languages, it's a book about lost writing systems (in the sense that their meaning is largely lost to us), and about attempts at decipherment. What makes this book fascinating is how culture often seems to inform the decipherment of scripts. As such, Robinson throws in a lot about the history of each culture, as well as the technical details of decipherment. Robinson gives us a taste of the history of writing, bringing to life a human need that has spanned the ages.

This need is the need to organise, record and remember, and it is the single most fascinating thing about this book. People say we now live in an 'Information Society'; but I believe that we always have, just in ways that have been limited by the available tools and infrastructure around us. This desire to order and make sense of our world has led to the invention of many hundreds of different scripts, of varying degrees of complexity and efficiency (Robinson highlights the fact that Japanese is the most inefficient writing system in the world, yet Japan has one of the world's highest levels of literacy). Throughout time, human ingenuity has been funnelled into the activity of writing and recording, even if it means re-inventing the wheel again and again.

The most intriguing example of this is the rongorongo script of Easter Island, a relatively young script which died possibly within the space of 90 years. No one knows exactly when it was invented or when it died out, what it was used for or what any of the beautifully cartoon-like characters mean. But somehow, for some reason, someone on this isolated little island in the middle of nowhere felt the need to invent a complex script that flourished for a short time before its flame suddenly and dramatically died out with the coming of the slave trade and disease from the West. And yet this writing system, constructed about the 18th-19th century, shares striking similarities with a totally unrelated system (The Indus Valley Script), which died out nearly 3 millennia before.

What I found most enlightening about Robinson's book is the fact that nearly all the scripts he highlights were created not in order to create but to record. There are no great epics or literary works to be found amongst the earliest records. Nor are there mythologies, histories, or philosophies. Most of the earliest writings deal with records, itineraries, calendars, and lists of people - slaves or kings. What drove people to invent writing? It was a need to record, enumerate and catalogue information. To order our world, even to the most mundane degree. That is what those first pioneers, the inventors of writing, bequeathed to us. Theirs was an information society as much as ours is, and Lost Languages gives an intriguing insight into the lost information societies of yesteryear.

| The mysterious rongorongo script. |

| An Indus Valley seal. |

Saturday, 25 February 2012

Web Archiving + Videogame Archiving = Digital Preservation?

| The Atari 2600. The first games console I ever got my grubby mitts on. |

And something about this really bothers me.

I mean, I totally get the need for priorities here. The Web certainly holds a lot more useful information (e.g. 'oral histories', online election campaigns, government portals etc.) that should be preserved for future generations. And videogames... well, that's just what anti-social, nerdy guys stuck in their basements/bedrooms half their lives deal with, right?

I think there's something of a perception that videogames lead to brain rot in our young. Videogames have had so much bad press over the years that to talk about them in any serious forum is anathema. Why would anyone want to preserve anything that turns kids into zombies at best, killers at worst?

The fact is, videogames are an important part of our cultural heritage. From the Lewis Chessmen to the Monopoly board, games have been an integral part of cultural life - why should videogames be treated any differently?

I suspect that the aversion is not simply because of the bad connotations computer gaming conjures up in the minds of many; it's also the fact that preserving games is as difficult as preserving the web is. Games are not called 'ephemera' for nothing.

There are two main ways of preserving digital content - migration and emulation. Migration involves the conversion of files to formats supported by existing technologies, whilst emulation involves the recreation of obsolete technologies on existing computer platforms. Both exist in the world of videogaming. Consider the most famous old games - games like Pac-Man and Tetris. These games will always be 'ported' to new formats and consoles because they are so hugely popular on so many levels. But what about games like 'The Perils of Rosella'? And 'Everyone's a Wally'? Such obscure titles are left to bite the dust because no one cares about them, and/or the hardware to actually play them died a couple of decades ago. Now some hard-working peeps (or geeks, as some might call them) create emulators in order to play them again on a currently existing platform. They even helpfully upload them to the web so others can play them. Is that an archive there? Yes, I think it is!

Having said all that, there is growing interest in the preservation of videogames, even if progress in the area has been slow and patchy. The National Media Museum is now housing the National Videogame Archive, which as far as I can tell collects retro consoles and gaming hardware. And the British Library is now starting its own videogame website archiving project, which aims to collect not only online gaming archives, but also related gaming websites such as online walkthroughs, fanart, fanfic, and so on. There is however a limit to what the British Library can do, as permission has to be sought from rights holders before archiving can take place, a serious shortcoming when it comes to archiving actual gaming content. Nevertheless, it's a step in the right direction, and I hope that fellow archivists across the pond and elsewhere (where licensing laws are more conducive to mass web archiving) are starting out on the same path too.

Sunday, 19 February 2012

From the Shelf: The Longer Long Tail by Chris Anderson

I picked up this book randomly in Waterstones the other day, trying to use up the points on the gift card my work colleagues had thoughtfully bought me as a leaving present. I remembered hearing about the 'Long Tail' on the LISF module, and figured it would be relevant reading.

The idea of the Long Tail isn't new, and is probably old hat to most readers. This book is an updated version of Chris Anderson's 2006 original, but to be honest, I don't think there was much added between the two editions. Most of the information was pertinent to pre-2006, and this edition happens to be 2009, so it's still a little out of date. For instance - no mention of Twitter, Spotify, and other newer social media. Not even last.fm, come to think of it. The thing is, you don't really need to have those sites mentioned. Anderson gives you all the detail, and you just run with it. Really, you could read 'Twitter' for 'Facebook' and you'd still get the same idea. The theory of the Long Tail would still hold true.

And really, that's the only beef I have with this book. Much as I enjoyed it (and I tell you true, I did), most of it was repetition. After the first couple of chapters, you'd pretty much got the point and were ready to move on. There is a Long Tail for just about anything you can think of - music, movies, coffee, chocolate, beer, clothes, prams, cars, advertising. After finishing this book, you will be wondering what ISN'T affected by the Long Tail and finding yourself hard-pressed to find anything.

The thing is, once you know it, it's obvious. We all have niche interests. There's nothing amazing about that. It's just that, with the internet, we have been spoiled by the expansion of opportunities to indulge in those interests. Perhaps for the first time in history, people have seen wide access to niche markets open up before them at the click of a mouse. In the past, traditional 'bricks and mortar' stores concentrated only on the hits and the bestsellers because they had no space for the rare and the obscure, and the hits were sure to sell and make a profit. Simple math. Anderson's point is that this paradigm is dying - because of the World Wide Web. Now the niche market constitutes a growing proportion of a company's overall profits - and it's continuing to grow. If you have enough buyers in any one niche market, you are still going to make a hell of a lot of money, even if each of them buys only one unit per annum.

(Incidentally, this is one of the things I enjoyed most about being in Japan, specifically Tokyo. Stores there heavily cater to Long Tail markets. Yellow Submarine serves the long tail of roleplay gaming; Mandarake serves the long tail of self-published fan comics; Book Off serves the long tail of second-hand books, CDs, DVDs and videogames.)

Some people have criticised Anderson for not getting the economics right in this book; I don't know much about economics, but I know enough from my own experience as a Long Tail consumer. There is no way I could've bought VS System cards a year after they'd been discontinued without access to specialist online stores such as the 13th Floor. Neither could I have found the hard-to-find beads and findings I was looking for unless I went to great expense to get them specially shipped - or bought them cheaply and conveniently at The Bead Shop. A whole new world has opened up for me and my interests through the internet, just as a whole new market has been born for many businesses out there in the 'real world'.

So yeah. It's worth a read. Even if only to get your brain ticking over all the Long Tails it can possibly conceive of.

Thursday, 29 December 2011

Web 2.0 and Web 3.0 – A Gamer’s Perspective

Introduction

With the advent of faster internet connections, online gaming has grown in popularity; likewise, the emergence of Web 2.0 has given birth to the world of social networking. But these two entities are not always entirely exclusive of one another. Along with Facebook have also grown a plethora of social network games, such as Farmville and Mob Wars, where participants can interact with one another as well as play the game itself.

Similar games such as IMVU and Second Life include the option for members to create custom content (CC), which adds an extra dimension of interactivity for participants, allowing them to trade, exchange, and even buy and sell custom creations via the internet. This essay will discuss the ways in which Web 2.0 and 3.0 have enabled users to create and exchange such content, as well as swap tutorials, discuss game information, and find an audience. Specifically the essay will look at The Sims (2000), an old game by Maxis (now a subsidiary of Electronic Arts) which, despite two newer sequels, still retains a large following. Many sites related to this game are now obsolete, motivating fans to preserve and archive custom content which can no longer be found elsewhere. Three points will be discussed: 1) how gamers have used Web 2.0 and 3.0 tools to effectively create, archive and publish The Sims custom content on the web; 2) how they use current advances in ICTs to create ‘information networks’ with other fans and gamers; and 3) how the archiving of custom content has been digitally organised and represented on the web. Lastly, we will see how present technologies can be further used to enhance gamers' experiences in the future.

1. Creating and Publishing Custom Content (CC)

1.1. XML and the creation of custom content for The Sims

Objects in the game The Sims are stored in the IFF (Interchange File Format) file format, a standard file format which was developed by Electronic Arts in 1985 (Seebach, 2006). Over the years, gamers and fans have discovered ways to manipulate IFF files, and thus clone and customise original Sims objects. This has been done with the tacit endorsement of the game creator, Will Wright (Becker, 2001), and has been supported by the creation of various open-source authoring and editing tools such as The Sims Transmogrifier and Iff Pencil 2.

The Sims Transmogrifier enables users to ‘unzip’ IFF object files, exporting the sprites into BMP format, and converting the object’s metadata into XML format (see Fig. 1). XML (eXtensible Markup Language) is a standard ‘metalanguage’ format which “now plays an increasingly important role in the exchange of a wide variety of data on the web” (Chowdhury and Chowdhury, 2007, p.162). Evidence of its versatility is that it can be used to edit the properties of Sims objects, either manually or via a dedicated program. The game application then processes the data by reading the tags in context.

| Fig. 1 - An example of a Sims object's metadata expressed in XML. The tags can be edited to change various properties of the object, such as title, price and interactions. |

This highlights two points relating to the general use of XML: a) it is versatile enough to be used to edit the properties of game assets; and b) it is accessible enough for gamers to effectively edit it in order to create new game assets.
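As a rough illustration of the kind of edit described above, the following Python sketch changes the title and price of an object by rewriting its XML tags. The element names (`object`, `title`, `price`, `interactions`) and the sample values are assumptions made for this example only; they are not taken from Transmogrifier's actual export format, which should be checked against a real exported file.

```python
import xml.etree.ElementTree as ET

# Hypothetical metadata for one object. The element and attribute names
# are illustrative only and are NOT Transmogrifier's real schema.
SAMPLE_XML = """<object>
  <title>Plain Chair</title>
  <price>80</price>
  <interactions>
    <interaction name="Sit"/>
  </interactions>
</object>"""


def edit_object(xml_text, new_title, new_price):
    """Return the XML text with the title and price tags rewritten."""
    root = ET.fromstring(xml_text)
    root.find("title").text = new_title
    root.find("price").text = str(new_price)
    # Everything else (e.g. the interactions) is left untouched.
    return ET.tostring(root, encoding="unicode")


edited = edit_object(SAMPLE_XML, "Gothic Throne", 450)
print(edited)
```

The same change could be made by hand in a text editor, which is the accessibility point made above; a script only becomes worthwhile when many objects need the same adjustment.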

1.2. Pay vs. Open-Source

The pay vs. open-source debate has not left The Sims world unscathed. In fact, it is a contentious issue that has divided the community for years. CC thrives on open-source software; indeed, cloning proprietary Maxis objects in order to create new ones would not be possible without free programs such as Transmogrifier. These tools have supported the growth of an entire creative community that modifies game content. However, there are software tools that are not open-source and which are often met with disapproval by gamers. Some CC archive sites, such as CTO Sims, have opted to make these programs available to their members for free, which has been controversial for some.

Even more contentious is the selling of CC, which has been made possible with the use of APIs (see Fig. 2). Many players feel that they should not have to pay for items which have, after all, merely been cloned from Maxis originals. Some creators choose to sit on the fence, putting PayPal donation buttons on their sites for those who wish to contribute to web-hosting costs. It seems that such a debate would not exist without online transaction web services like PayPal, which seem to have fed a controversial market in CC. On the other hand it is largely the open source 'movement' which has allowed creators to make their content in the first place, and so the latest web technologies have essentially fuelled both sides of a debate which looks set to continue.

| Fig. 2 - A PayPal API added to the site Around the Sims. The donation button allows payments to be made directly through PayPal's site. Registration is not necessary. |

1.3. Publishing CC on the Web

There are several methods through which creators publish their work. One is blogging. Fig. 3 shows an example of a creator's blog, Olena's Clutter Factory, which showcases her CC and allows gamers to download it. The Clutter Factory is a very successful site – it not only provides high quality downloads, but also a level of interactivity not often seen with The Sims fan sites. Because it is hosted by Blogger, it allows fellow Google members to follow updates as new creations are added, post comments, and browse via tags.

| Fig. 3 - A blog entry at Olena's Clutter Factory |

Another example of effective publishing is the author's website, Sims of the World, which is hosted by Google Sites (see Appendix 1). Google Sites allows the webmaster to use a variety of tools in order to publish data on the web. This includes the ability to create mashups using what Google calls 'gadgets'.

Google gadgets are essentially APIs, “miniature objects made by Google users … that offer … dynamic content that can be placed on any page on the web” (Google, 2011). Examples of Google gadgets are the Picasa Slideshow and the +1 facility. Sims of the World uses Picasa Slideshow to display the site owner's online portfolio to fellow gamers in a sidebar. Gamers are therefore able to view examples of the creator's work before choosing to download it. If they like what they see, they have the option to use the +1 button, which effectively recommends the site to other gamers by bumping up its rating on Google's search engine.

Google Sites also allows the addition of outside APIs. Sims of the World has made use of this facility by embedding the code from a site called Revolver Maps, which maps visitors to the site in real time. Not only can the webmaster view previous, static visits to the site, they can also view present visitors to the site via a 'beacon' on the map. This tool makes it possible to view visitor demographics and gauge the popularity of the site. For a more in-depth view, the site's visits may be mapped via Google Analytics, which provides more detailed information.

Google itself is an excellent tool for web publishers as it accommodates editing whilst on the move. The Google Chrome browser now comes with a handy sync function, which stores account information in the 'cloud', and allows it to be accessed wherever the webmaster happens to be. Browser information such as passwords, bookmarks and history is stored remotely, which makes accessing the Google Sites manager quick and convenient, with all relevant data available immediately. This is an invaluable tool for webmasters, as well as for the regular internet surfer.

2. Social Networking and The Sims

"Participation requires a balance of give (creating content) and take (consuming content)."

(Rosenfeld and Morville, 2002, p.415).

The customisation of game assets from The Sims has opened up a huge online community which shares, exchanges and even sells CC. The kudos attached to the creation of CC means that creators often seek to actively display and showcase their work on personal websites, blogs and Yahoo groups. This has created a kind of social networking where creators exchange links to their websites, comment on one another’s works, and display their virtual portfolio. As Tomlin (2009, p.19) notes: “The currency of social networks is your social capital, and some people clearly have more social capital than others.”

How then do creators promote their social capital?

Gamers have been quick to use social networking in order to showcase creations or keep the wider community informed. An example is the Saving the Sims Facebook page, which has a dedicated list of CC updates, as well as relevant community news. Other examples include Deestar's blog, Free Sims Finds, which also displays pictures of the latest custom content for The Sims, Sims 2 and Sims 3.

Forums have also become the lifeblood of The Sims gaming community and its “participation economy” (Rosenfeld and Morville, 2002). These include fora such as The Sims Resource, CTO Sims and Simblesse Oblige, which give gamers the opportunity to share hints, tips, and other game information as well as their own creations. They also offer the chance to build relationships with other gamers and fans. Themed challenges and contests are a popular pastime at these fora, with prizes and participation gifts given out regularly. Threads are categorised by subject, can be subscribed to, and at CTO can even be downloaded as PDFs for quick, offline reading.

Simblesse Oblige is a forum that specialises in gaming tutorials. Unlike webpage-based tutorials, Simblesse Oblige's tutorials have the advantage of being constantly updated by contributors (similar to the articles on Wikipedia). Forum members may make suggestions in the tutorial thread, add new information to the tutorials, or even query the author. Members are welcome to add their own tutorials (with the option of uploading explanatory images) to the forum and share their own knowledge with peers. This constantly changing, community-driven forum is a far cry from the largely one-dimensional, monolithic Usenet lists of Web 1.0.

3. Digital Organisation of The Sims CC

3.1. Archiving old CC

The Sims is an old game, and over the years many CC sites have disappeared. In recent years The Sims has seen a resurgence in popularity, and consequently there has been a move to rescue and preserve such content via sites such as CTO Sims, Saving the Sims, or Sims Cave. Unfortunately, this 'rescue mission' has been a slap-dash, knee-jerk reaction to the sudden disappearance of so much ephemera - items that no one thought they would need. Much has been done to save the contents of entire websites via the Internet Archive's Wayback Machine, for instance, but this cannot guarantee that any one website has been scraped in its entirety.

Sims collectors or archivists have resorted to community donations to help save what has been lost. This is a patchy process, as gamers generally do not collate custom content in an organised fashion, sometimes not knowing where their 'donations' come from or what exactly they consist of. Thus, archiving custom content is a time-consuming business which involves determining the nature of each file, labelling it, determining its properties and provenance and publishing it under the correct category (e.g. type of custom content, original creator/website etc.).

3.2. The Future of CC Archiving?

CTO Sims is a site - part archive, part forum - which attempts to rescue custom content from dead sites and publish it for the use of the community, categorising each item as closely to the original host site as possible. However, this is not always possible, as some sites are no longer accessible even via the Internet Archive. Because additions to the archive are made on a largely ad hoc basis (as and when donations are uploaded by the community), categorising is inefficient and, with no catalogue to check against, duplications are common. The number of files is ever-expanding, creating an increasing need for a cataloguing or indexing system of some kind.
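To illustrate the kind of catalogue described above, here is a minimal sketch in Python. It assumes that duplicates are byte-for-byte identical files, so a content hash is enough to spot them. The class and field names (`Catalogue`, `CatalogueEntry`, `source_site`) are hypothetical and are not taken from any existing archive.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class CatalogueEntry:
    """One archived file; all field names are illustrative."""
    title: str
    category: str      # e.g. "Member Creations/Skins"
    source_site: str   # original host site, if known
    sha256: str        # hash of the file contents


@dataclass
class Catalogue:
    # Entries are keyed by content hash, so identical files collide.
    entries: dict = field(default_factory=dict)

    def add(self, data: bytes, title: str, category: str,
            source_site: str = "unknown") -> bool:
        """Add a file; return False if identical content is already held."""
        digest = hashlib.sha256(data).hexdigest()
        if digest in self.entries:
            return False
        self.entries[digest] = CatalogueEntry(title, category, source_site, digest)
        return True


catalogue = Catalogue()
# Placeholder bytes stand in for a real donated file.
first = catalogue.add(b"fake skin bytes", "The Seamstress", "Member Creations/Skins")
second = catalogue.add(b"fake skin bytes", "seamstress_copy", "Uncategorised")
print(first, second, len(catalogue.entries))
```

A hash only catches exact copies. A file that has been renamed is still detected, but one that has been re-compressed or edited would need a human eye or richer metadata.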

With the use of XML, RDF (Resource Description Framework) and other indexing tools, this may be the perfect time for such a system to be developed by the community. It is hoped that present and future technologies will aid in the further preservation of these niche ephemeral items, which display a unique side of online pop-culture. (See Appendix 2 for an example of a proposed RDF schema).

Blog URL:- http://digisqueeb.blogspot.com

References and Bibliography

Becker, D., 2001. Newsmaker: The Secret Behind ‘The Sims’. CNET News, 16 March 2001. Available at: http://news.cnet.com/The-secret-behind-The-Sims/2008-1082_3-254218.html [Accessed on 28 December 2011].

Brophy, P., 2007. The Library in the Twenty-First Century. 2nd ed. London: Facet.

Chowdhury, G. G. and Chowdhury, S., 2007. Organizing Information: from the shelf to the web. London: Facet.

Feather, J. and Sturges, R. P. eds., 2003. International Encyclopedia of Information and Library Science. 2nd ed. London: Routledge.

Google, 2011. Gadgets. [online] Available at: http://www.google.com/webmasters/gadgets/ [Accessed on 28 December 2011].

Rosenfeld, L. and Morville, P., 2002. Information Architecture for the World Wide Web. 2nd ed. California: O’Reilly.

Seebach, P., 2006. Standards and specs: The Interchange File Format (IFF). 13 June 2006. Available at: http://www.ibm.com/developerworks/power/library/pa-spec16/?ca=dgr-lnxw07IFF [Accessed on 27 December 2011].

Sihvonen, T., 2011. Players Unleashed! Modding The Sims and the Culture of Gaming. Amsterdam: Amsterdam University Press.

Tomlin, I., 2009. Cloud Coffee House: The birth of cloud social networking and death of the old world corporation. Cirencester: Management Books 2000.

Appendix 1

An example of a proposed RDF schema applied to CC for The Sims, composed by the author.

This picture shows a skin for The Sims. The skin file (.skn, .cmx and .bmp) would be the subject. ‘The Seamstress’ would be the object. The predicate would be ‘has title’ (http://purl.org/dc/elements/1.1/title).

Here are examples of other RDF triples that can be applied to this item:-

Subject: this item

Object: skin file

Predicate: Type (http://purl.org/dc/elements/1.1/type)

Subject: this item

Object: Ludi_Ling

Predicate: Creator (http://purl.org/dc/elements/1.1/creator)

Subject: this item

Object: 11/08/2011

Predicate: Date (http://purl.org/dc/elements/1.1/date)

Subject: this item

Object: birthday present for JM

Predicate: Description (http://purl.org/dc/elements/1.1/description)

Subject: this item

Object: .rar

Predicate: Format (http://purl.org/dc/elements/1.1/format)

Subject: this item

Object: Member Creations/Skins

Predicate: Category
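As a rough sketch of how some of these triples might be written down in machine-readable form, the Python below serialises them as N-Triples lines. The Dublin Core predicate URIs are the ones given above. The subject URI under `example.org` is a made-up placeholder, since the item has no published identifier. The Format and Category triples are left out, the latter because no predicate URI is given for it.

```python
DC = "http://purl.org/dc/elements/1.1/"
ITEM = "http://example.org/cc/the-seamstress"  # hypothetical identifier

# (subject, predicate, object) with the object as a plain literal
triples = [
    (ITEM, DC + "title", "The Seamstress"),
    (ITEM, DC + "type", "skin file"),
    (ITEM, DC + "creator", "Ludi_Ling"),
    (ITEM, DC + "date", "11/08/2011"),
    (ITEM, DC + "description", "birthday present for JM"),
]


def to_ntriples(subject: str, predicate: str, literal: str) -> str:
    """Format one triple as an N-Triples line with a string literal object."""
    escaped = literal.replace("\\", "\\\\").replace('"', '\\"')
    return f'<{subject}> <{predicate}> "{escaped}" .'


lines = [to_ntriples(*t) for t in triples]
print("\n".join(lines))
```

Each printed line is one statement about the item, so a catalogue could in principle be built up one line at a time as donations are identified.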

Sunday, 4 December 2011

The World of Open

One thing I'm all for is the world of open on the web. I can't be bothered with registering, subscribing and paying. Surely the whole point of the net is to have everything at your fingertips? Who wants to jump through all those hoops?

I'm a big fan of FileHippo. Many of the programs I've downloaded are open source. I'm not crazy about the whole open source movement (I take a practical approach rather than an ideological one), but I fully support anything to do with open source and open data. Where freedom of information is concerned, it is a whole lot more practical to make everything available online than to spend hundreds of man hours working through request after request. Not to mention the advantages of creating RDF schemas for such data and creating huge mashups of information.

On a personal level, open source comes into my everyday life a lot when creating for The Sims. There is a big divide in the community about being 'fileshare friendly', or choosing to withhold or charge for the content you have created. This goes not only for custom items, skins and objects, but also for custom programs made to get the most out of the game. It is a very contentious issue, and still divides the community after many years. It seems that open source is something that people feel strongly about on both sides of the divide.

Sharing my creations freely is, for me, part of belonging to an online community that helps everyone get the best out of the game. The ability to push the boundaries with your creations and 'show off' what you can achieve is also a big motivation. It is also a great way for creators and users to share their ideas and needs, to look outside the box and to create things from a whole new viewpoint. Creators create in response to other players' needs as well as their own. This makes the community atmosphere very productive, stimulating and open-minded. And more often than not, people are passionate enough about a service to donate in order to cover costs, so it works both ways.

Friday, 25 November 2011

The journey into Web 3.0....

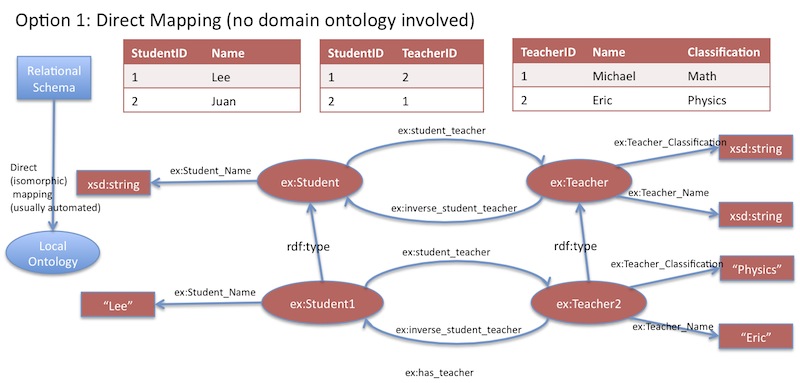

I'd always read of the semantic web as Web 2.0. So it was a surprise to come across the concept of Web 3.0. Actually, the term 'semantic web' has been bandied around for a long time. What it actually is is up for debate. The easiest way to think about it is in terms of Web 1.0 = read only, Web 2.0 = read/write, and Web 3.0 = read/write/execute.

What I take from the concept of Web 3.0 is the idea of making machines smarter, more 'intuitive'. Of course, since computers are inherently stupid, this involves us giving them the means with which to become smarter and more intuitive. Like giving them the semantics to work with, in order to retrieve data more effectively. In short, data is not only machine readable, but machine understandable.

This is all technically theory, since the semantic web doesn't really exist - yet. We still don't really have a system whereby computers can build programmes, websites, mashups, what have you, in an intelligent and 'intuitive' way according to our needs. But the potential is there, with tools like XML and RDF. These involve the creation of RDF triples, taxonomies, and ontologies. What the hell, you may ask? And I may well agree with you. How I've come to understand it is that these are essentially metadata applied to information in order to relate those pieces of information together in a semantic way. These relationships between data or pieces of information make it easier to retrieve them, because they are now given a context within a larger whole.

This does have its parallels with Web 1.0. RDF Schemas (taxonomies expressed as groups of RDF triples) have a certain similarity to the concept of relational databases. RDF schema 'nodes' can be equated to the primary key in relational databases. In fact, the whole idea of RDF schemas can get a little confusing, so some of us found it easier to think of it in terms of a web-based relational database.
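To make the relational database comparison a little more concrete, here is a small Python sketch of one way a database row could be turned into triples, with the primary key becoming the subject 'node'. This is only an illustration of the analogy. The namespaces, the column names and the `row_to_triples` helper are all invented for the example.

```python
BASE = "http://example.org/students/"   # hypothetical namespace for subjects
VOCAB = "http://example.org/terms/"     # hypothetical vocabulary for predicates

# One row of an imaginary 'students' table; student_id is the primary key.
row = {"student_id": 101, "name": "A. Student", "postcode": "N1"}


def row_to_triples(row: dict, key: str) -> list:
    """Use the primary key as the subject; every other column becomes a triple."""
    subject = f"{BASE}{row[key]}"
    return [
        (subject, VOCAB + column, str(value))
        for column, value in row.items()
        if column != key
    ]


triples = row_to_triples(row, key="student_id")
for triple in triples:
    print(triple)
```

The row collapses into a set of statements that all share the same subject, which is roughly what the primary key does for the columns in a table.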

The idea behind this is essentially to create relational databases on the net, but to link them semantically to one another, effectively creating the ability to find correlations between vast amounts of data that would otherwise be remote and essentially invisible. The advantage is that we can bring the accuracy of database retrieval to searches on the Web. To take the diagram's example: we have a database of students' GCSE results. If we pair this up with a demographic database, and apply an ontology to them (thereby creating rules of inference), we can discover correlations between GCSE results and student demographics.
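As a very rough sketch of the GCSE example, the Python below links two tiny datasets through a shared student identifier and averages the results per demographic group. All of the figures and identifiers are invented for illustration. It is also a plain join on a common key, not real ontology-based inference, which would need considerably more machinery.

```python
from collections import defaultdict
from statistics import mean

# Two separate 'databases' sharing the same (made-up) student identifiers.
gcse_points = {"s1": 52, "s2": 38, "s3": 47, "s4": 41}
region = {"s1": "North", "s2": "South", "s3": "North", "s4": "South"}


def average_by_group(scores: dict, groups: dict) -> dict:
    """Link the two datasets on their shared key and average scores per group."""
    grouped = defaultdict(list)
    for student, score in scores.items():
        if student in groups:  # skip students missing from the second dataset
            grouped[groups[student]].append(score)
    return {group: mean(values) for group, values in grouped.items()}


averages = average_by_group(gcse_points, region)
print(averages)
```

The shared identifier is what makes the link possible. The promise of the semantic web is that such links could be declared once in the data itself, rather than hand-coded for every pair of datasets.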

The potential to make even more advanced and relevant mashups is the huge advantage of this technology. However, the problem is that it requires quite a bit of skill and time investment in order to create these schemas, taxonomies and ontologies. Most people would be satisfied with what they can create via Web 2.0 technologies - with social networking, blogging and mashups. The potential to make faulty schemas is also a problem - as with a database, an error in organising the information, or in the data retrieval process, can make the data impossible to retrieve.

But the main problem I see is that these schemas are only really applicable to certain domains, such as librarianship, archiving, biomedicine, statistics, and other similar fields. For the regular web user, there are no particular benefits to applying an RDF schema to, say, your Facebook page - but it would probably be useful to Mark Zuckerberg.

The semantic web is probably more useful in the organisation of information on the web, and harnessing it. But it would probably be largely invisible and irrelevant to the legions of casual web users out there.

Figure: Relational database <-> RDF schema
