Introduction

With the advent of faster internet connections, online gaming has grown in popularity; likewise, the emergence of Web 2.0 has given birth to the world of social networking. However, the two are not mutually exclusive. Alongside Facebook, a plethora of social network games has grown up, such as FarmVille and Mob Wars, in which participants can interact with one another as well as play the game itself.

Similar games such as IMVU and Second Life include the option for members to create custom content (CC), which adds an extra dimension of interactivity, allowing participants to trade, exchange, and even buy and sell custom creations via the internet. This essay will discuss the ways in which Web 2.0 and 3.0 have enabled users to create and exchange such content, as well as swap tutorials, discuss game information, and find an audience. Specifically, the essay will look at The Sims (2000), an older game by Maxis (now a subsidiary of Electronic Arts) which, despite two newer sequels, still retains a large following. Many sites related to this game are now obsolete, which has motivated fans to preserve and archive custom content that can no longer be found elsewhere. Three points will be discussed: 1) how gamers have used Web 2.0 and 3.0 tools to create, archive and publish The Sims custom content on the web; 2) how they use current advances in ICTs to create ‘information networks’ with other fans and gamers; and 3) how the archiving of custom content has been digitally organised and represented on the web. Lastly, the essay will consider how present technologies could be used further to enhance gamers' experiences in the future.

1. Creating and Publishing Custom Content (CC)

1.1. XML and the creation of custom content for The Sims

Objects in The Sims are stored in IFF (Interchange File Format), a standard file format developed by Electronic Arts in 1985 (Seebach, 2006). Over the years, gamers and fans have discovered ways to manipulate IFF files, and thus to clone and customise original Sims objects. This has been done with the tacit endorsement of the game's creator, Will Wright (Becker, 2001), and has been supported by the creation of various open-source authoring and editing tools such as The Sims Transmogrifier and Iff Pencil 2.
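The 1985 IFF standard stores data as a sequence of chunks, each consisting of a four-character ID, a four-byte big-endian length and the data itself, padded to an even length; a FORM chunk wraps a four-character form type followed by nested chunks. The Python sketch below walks such a file. It illustrates the generic EA IFF 85 layout only: Sims object files reportedly use a modified variant with larger chunk headers, so this code would not parse them as written, and the chunk IDs in the usage example are made up.

```python
import struct
from typing import Iterator, List, Optional, Tuple

def iter_chunks(data: bytes, offset: int = 0,
                end: Optional[int] = None) -> Iterator[Tuple[str, bytes]]:
    """Yield (chunk_id, payload) pairs from an EA IFF 85 byte string."""
    end = len(data) if end is None else end
    while offset + 8 <= end:
        chunk_id = data[offset:offset + 4].decode("ascii")
        # Length is a 32-bit big-endian unsigned int excluding the 8-byte header.
        (size,) = struct.unpack(">I", data[offset + 4:offset + 8])
        yield chunk_id, data[offset + 8:offset + 8 + size]
        # Odd-length chunks are followed by one pad byte not counted in size.
        offset += 8 + size + (size & 1)

def parse_form(data: bytes) -> Tuple[str, List[Tuple[str, int]]]:
    """Return a FORM's type and the (ID, length) of each nested chunk."""
    chunk_id, payload = next(iter_chunks(data))
    if chunk_id != "FORM":
        raise ValueError("not an IFF FORM")
    form_type = payload[:4].decode("ascii")
    return form_type, [(cid, len(body)) for cid, body in iter_chunks(payload, 4)]

def make_chunk(chunk_id: bytes, body: bytes) -> bytes:
    """Build one chunk, adding the pad byte when the body length is odd."""
    pad = b"\x00" if len(body) % 2 else b""
    return chunk_id + struct.pack(">I", len(body)) + body + pad

# Usage: a tiny hand-built FORM holding two made-up chunks.
form = make_chunk(b"FORM", b"TEST" + make_chunk(b"NAME", b"Chair")
                  + make_chunk(b"NOTE", b"ok"))
print(parse_form(form))  # ('TEST', [('NAME', 5), ('NOTE', 2)])
```

The chunk-based design is what makes IFF extensible: a reader can skip any chunk whose ID it does not recognise simply by jumping over its stated length.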

The Sims Transmogrifier enables users to ‘unzip’ IFF object files, exporting the sprites as BMP images and converting the object's metadata into XML (see Fig. 1). XML (eXtensible Markup Language) is a standard ‘metalanguage’ which “now plays an increasingly important role in the exchange of a wide variety of data on the web” (Chowdhury and Chowdhury, 2007, p.162). Evidence of its versatility is that it can be used to edit the properties of Sims objects, either manually or via a dedicated program. Once edited, the XML and sprites are imported back into an IFF file, which the game then reads.

Fig. 1 - An example of a Sims object's metadata expressed in XML. The tags can be edited to change various properties of the object, such as title, price and interactions.

This highlights two points about XML in general: a) it is versatile enough to be used to edit the properties of game assets; and b) it is accessible enough for gamers to edit it effectively in order to create new game assets.
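To illustrate the kind of edit described above, the following Python sketch changes an object's title and price using the standard library's ElementTree module. The element names (`object`, `catalog`, `title`, `price`) and the function name `retitle_and_reprice` are hypothetical; the XML actually exported by The Sims Transmogrifier is structured differently, so this shows the principle rather than a working recipe for real object files.

```python
import xml.etree.ElementTree as ET

# Hypothetical layout for illustration; real Transmogrifier exports differ.
SAMPLE = """<object>
  <catalog>
    <title>Comfy Chair</title>
    <price>150</price>
  </catalog>
</object>"""

def retitle_and_reprice(xml_text: str, new_title: str, new_price: int) -> str:
    """Return the XML with the catalogue title and price replaced."""
    root = ET.fromstring(xml_text)
    title = root.find("catalog/title")
    price = root.find("catalog/price")
    if title is None or price is None:
        raise ValueError("expected catalog/title and catalog/price elements")
    title.text = new_title
    price.text = str(new_price)
    return ET.tostring(root, encoding="unicode")

edited = retitle_and_reprice(SAMPLE, "Comfy Chair (recoloured)", 95)
print(edited)
```

Because the program only looks tags up by name, the same approach works whether the edit is made by hand in a text editor or by a dedicated tool.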

1.2. Pay vs. Open-Source

The pay vs. open-source debate has not left The Sims world unscathed. In fact, it is a contentious issue that has divided the community for years. CC thrives on open-source software; indeed, cloning proprietary Maxis objects in order to create new ones would not be possible without free programs such as Transmogrifier. These tools have supported the growth of an entire creative community that modifies game content. However, some software tools are not open-source, and these are often met with disapproval by gamers. Some CC archive sites, such as CTO Sims, have opted to make these programs available to their members for free, which some have found controversial.

Even more contentious is the selling of CC, which has been made possible by online payment services and their APIs (see Fig. 2). Many players feel that they should not have to pay for items which have, after all, merely been cloned from Maxis originals. Some creators choose to sit on the fence, putting PayPal donation buttons on their sites for those who wish to contribute to web-hosting costs. Arguably, the debate would not exist without online transaction services like PayPal, which seem to have fed a controversial market in CC. On the other hand, it is largely the open-source 'movement' that has allowed creators to make their content in the first place, so the latest web technologies have essentially fuelled both sides of a debate which looks set to continue.

Fig. 2 - A PayPal API added to the site Around the Sims. The donation button allows payments to be made directly through PayPal's site. Registration is not necessary.

1.3. Publishing CC on the Web

There are several methods through which creators publish their work. One is blogging. Fig. 3 shows an example of a creator's blog, Olena's Clutter Factory, which showcases her CC and allows gamers to download it. The Clutter Factory is a very successful site: it not only provides high-quality downloads, but also a level of interactivity not often seen on The Sims fan sites. Because it is hosted by Blogger, it allows fellow Google members to follow updates as new creations are added, post comments, and browse via tags.

Fig. 3 - A blog entry at Olena's Clutter Factory
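Following a Blogger site's updates typically relies on its syndication feed, which Blogger serves in the Atom format, with post labels (tags) exposed as `category` elements. As a minimal sketch, assuming such a feed has already been downloaded, the Python below filters entries by label using ElementTree. The feed content and the function name `entries_with_label` are hypothetical and are not taken from Olena's Clutter Factory.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

ATOM = {"atom": "http://www.w3.org/2005/Atom"}

# Made-up feed in the Atom format, standing in for a downloaded blog feed.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>Example CC blog</title>
  <entry>
    <title>New dining set</title>
    <updated>2011-12-01T10:00:00Z</updated>
    <category term="objects"/>
  </entry>
  <entry>
    <title>Victorian skins</title>
    <updated>2011-12-05T18:30:00Z</updated>
    <category term="skins"/>
  </entry>
</feed>"""

def entries_with_label(feed_xml: str, label: str) -> List[Tuple[str, str]]:
    """Return (title, updated) for every entry tagged with the given label."""
    root = ET.fromstring(feed_xml)
    results = []
    for entry in root.findall("atom:entry", ATOM):
        terms = {c.get("term") for c in entry.findall("atom:category", ATOM)}
        if label in terms:
            results.append((entry.findtext("atom:title", namespaces=ATOM),
                            entry.findtext("atom:updated", namespaces=ATOM)))
    return results

print(entries_with_label(SAMPLE_FEED, "skins"))
```

A feed reader does essentially this on a schedule, which is how followers learn of new creations without revisiting the blog.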

Another example of effective publishing is the author's website, Sims of the World, which is hosted by Google Sites (see Appendix 1). Google Sites allows the webmaster to use a variety of tools in order to publish data on the web. This includes the ability to create mashups using what Google calls 'gadgets'.

Google gadgets are essentially small applications built with Google's gadgets API: “miniature objects made by Google users … that offer … dynamic content that can be placed on any page on the web” (Google, 2011). Examples include the Picasa Slideshow and the +1 button. Sims of the World uses the Picasa Slideshow to display the site owner's online portfolio to fellow gamers in a sidebar, so gamers can view examples of the creator's work before choosing to download it. If they like what they see, they can click the +1 button, which publicly recommends the site to other gamers and may improve its visibility in Google's search results.

Google Sites also allows third-party widgets to be embedded. Sims of the World has made use of this facility by embedding code from a site called Revolver Maps, which maps visitors to the site in real time. Not only can the webmaster view past visits to the site, they can also see current visitors via a 'beacon' on the map. This gives an indication of visitor demographics and of the site's popularity. For a more in-depth view, the site's visits may be tracked via Google Analytics, which provides more detailed information.

Google's services are also useful to web publishers because they accommodate editing on the move. The Google Chrome browser now comes with a sync function, which stores account information in the 'cloud' and allows it to be accessed wherever the webmaster happens to be. Browser information such as passwords, bookmarks and history is stored remotely, which makes accessing the Google Sites manager quick and convenient, with all relevant data available immediately. This is an invaluable tool for webmasters, as well as for regular internet users.

2. Social Networking and The Sims

"Participation requires a balance of give (creating content) and take (consuming content)."

(Rosenfeld and Morville, 2002, p.415).

The customisation of game assets from The Sims has opened up a huge online community which shares, exchanges and even sells CC. The kudos attached to the creation of CC means that creators often seek to actively display and showcase their work on personal websites, blogs and Yahoo groups. This has created a kind of social networking in which creators exchange links to their websites, comment on one another's works, and display their virtual portfolios. As Tomlin (2009, p.19) notes: “The currency of social networks is your social capital, and some people clearly have more social capital than others.”

How, then, do creators promote their social capital?

Gamers have been quick to use social networking to showcase creations and keep the wider community informed. An example is the Saving the Sims Facebook page, which has a dedicated list of CC updates as well as relevant community news. Another example is Deestar's blog, Free Sims Finds, which displays pictures of the latest custom content for The Sims, The Sims 2 and The Sims 3.

Forums have also become the lifeblood of The Sims gaming community and its “participation economy” (Rosenfeld and Morville, 2002). These include fora such as The Sims Resource, CTO Sims and Simblesse Oblige, which give gamers the opportunity to share hints, tips and other game information as well as their own creations. They also offer the chance to build relationships with other gamers and fans. Themed challenges and contests are a popular pastime at these fora, with prizes and participation gifts given out regularly. Threads are categorised by subject, can be subscribed to, and at CTO Sims can even be downloaded as PDFs for quick, offline reading.

Simblesse Oblige is a forum that specialises in gaming tutorials. Unlike static webpage tutorials, Simblesse Oblige's tutorials have the advantage of being constantly updated by contributors (similar to articles on Wikipedia). Forum members may make suggestions in the tutorial thread, add new information to the tutorials, or query the author. Members are welcome to add their own tutorials (with the option of uploading explanatory images) and share their knowledge with peers. This constantly changing, community-driven forum is a far cry from the largely one-dimensional, monolithic Usenet newsgroups of the Web 1.0 era.

3. Digital Organisation of The Sims CC

3.1. Archiving old CC

The Sims is an old game, and over the years many CC sites have disappeared. In recent years The Sims has seen a resurgence in popularity, and consequently there has been a move to rescue and preserve such content via sites such as CTO Sims, Saving the Sims and Sims Cave. Unfortunately, this 'rescue mission' has been a slapdash, knee-jerk reaction to the sudden disappearance of so much ephemera: items that no one thought would be needed. Much has been done to save the contents of entire websites via the Internet Archive's Wayback Machine, for instance, but this cannot guarantee that any one website has been captured in its entirety.

Sims collectors and archivists have resorted to community donations to help recover what has been lost. This is a patchy process, as gamers generally do not collate custom content in an organised fashion, and sometimes do not know where their 'donations' came from or what exactly they consist of. Archiving custom content is therefore a time-consuming business which involves determining the nature of each file, labelling it, establishing its properties and provenance, and publishing it under the correct category (e.g. type of custom content, original creator/website etc.).
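Part of the labelling step described above could be automated. The following Python sketch assigns a provisional category from a file's extension and records provenance, flagging anything it cannot classify for manual review. The mapping and the function name `catalogue_record` are hypothetical and simplified: `.skn` and `.cmx` come from the skin example in the appendix and `.iff` from the discussion of object files, while treating `.far` archives as objects is an assumption. A `.bmp` could equally be a skin texture or a screenshot, so it is deliberately left for a human to check.

```python
from pathlib import PurePosixPath
from typing import Dict, Optional

# Hypothetical, simplified mapping from file extension to archive category.
CATEGORY_BY_EXT: Dict[str, str] = {
    ".iff": "Objects",
    ".far": "Objects (archive)",  # assumption: FAR archives bundling objects
    ".skn": "Skins",
    ".cmx": "Skins",
}
NEEDS_REVIEW = "Needs manual review"

def catalogue_record(path: str, creator: Optional[str] = None,
                     source_site: Optional[str] = None) -> Dict[str, str]:
    """Build a provisional catalogue record for one donated file."""
    name = PurePosixPath(path).name
    ext = PurePosixPath(path).suffix.lower()
    return {
        "file": name,
        # Unknown extensions (including ambiguous .bmp files) go to review.
        "category": CATEGORY_BY_EXT.get(ext, NEEDS_REVIEW),
        # Provenance is often unknown for community donations.
        "creator": creator or "unknown",
        "source_site": source_site or "unknown",
    }

record = catalogue_record("donations/batch7/Seamstress.SKN", creator="Ludi_Ling")
print(record)
```

Recording "unknown" explicitly, rather than leaving fields blank, makes it easy to list the items whose provenance still needs to be traced.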

3.2. The Future of CC Archiving?

CTO Sims is a site, part archive and part forum, which attempts to rescue custom content from dead sites and publish it for the use of the community, filing each item under its original host site wherever possible. However, this is often impossible, as some sites are no longer accessible even via the Internet Archive. Because additions to the archive are made on a largely ad hoc basis (as and when donations are uploaded by the community) and there is no catalogue, categorising is often inefficient and duplicates often occur. The number of files is ever-expanding, creating a growing need for a cataloguing or indexing system of some kind.
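A catalogue would also make duplicates easy to spot. One common approach, sketched below in Python, is to index each upload by a cryptographic hash of its contents, so that byte-identical files are grouped together even when donors have renamed them. The file names and contents are made up, and the function names are hypothetical; this illustrates content hashing in general, not how CTO Sims actually works.

```python
import hashlib
from typing import Dict, List

def build_catalogue(files: Dict[str, bytes]) -> Dict[str, List[str]]:
    """Group file names by the SHA-256 digest of their contents."""
    catalogue: Dict[str, List[str]] = {}
    for name, content in files.items():
        digest = hashlib.sha256(content).hexdigest()
        catalogue.setdefault(digest, []).append(name)
    return catalogue

def find_duplicates(catalogue: Dict[str, List[str]]) -> List[List[str]]:
    """Return groups of names that share identical contents."""
    return [names for names in catalogue.values() if len(names) > 1]

# Usage with made-up donations: the same chair uploaded under two names.
uploads = {
    "donation1/chair.iff": b"chair object bytes",
    "donation2/comfy_chair.iff": b"chair object bytes",
    "donation2/lamp.iff": b"lamp object bytes",
}
print(find_duplicates(build_catalogue(uploads)))
```

In practice the bytes would be read from the uploaded files on disk. Content hashing only catches exact copies: a file that has been re-saved, re-compressed or edited produces a different digest, so near-duplicates would still need checking by hand.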

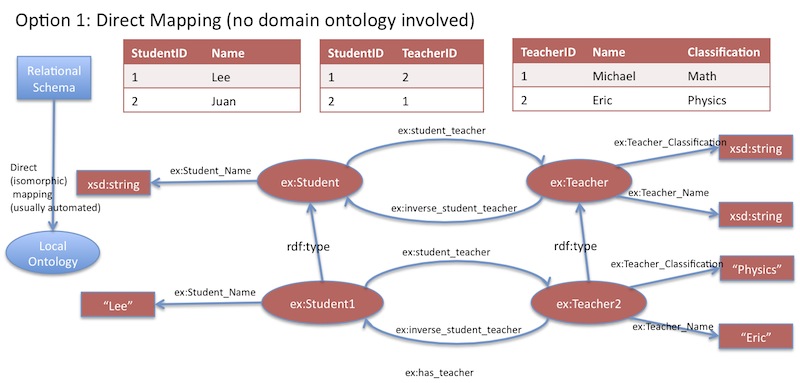

With XML, RDF (Resource Description Framework) and other indexing tools now available, this may be the ideal time for the community to develop such a system. It is hoped that present and future technologies will aid the further preservation of these niche ephemeral items, which display a unique side of online pop culture. (See Appendix 2 for an example of a proposed RDF schema.)

Blog URL: http://digisqueeb.blogspot.com

References and Bibliography

Becker, D., 2001. Newsmaker: The Secret Behind ‘The Sims’. CNET News, 16 March 2001. [online] Available at: http://news.cnet.com/The-secret-behind-The-Sims/2008-1082_3-254218.html [Accessed on 28 December 2011].

Brophy, P., 2007. The Library in the Twenty-First Century. 2nd ed. London: Facet.

Chowdhury, G. G. and Chowdhury, S., 2007. Organizing Information: from the shelf to the web. London: Facet.

Feather, J. and Sturges, R. P. eds., 2003. International Encyclopedia of Information and Library Science. 2nd ed. London: Routledge.

Google, 2011. Gadgets. [online] Available at: http://www.google.com/webmasters/gadgets/ [Accessed on 28 December 2011].

Rosenfeld, L. and Morville, P., 2002. Information Architecture for the World Wide Web. 2nd ed. California: O’Reilly.

Seebach, P., 2006. Standards and specs: The Interchange File Format (IFF). 13 June 2006. [online] Available at: http://www.ibm.com/developerworks/power/library/pa-spec16/?ca=dgr-lnxw07IFF [Accessed on 27 December 2011].

Sihvonen, T., 2011. Players Unleashed! Modding The Sims and the Culture of Gaming. Amsterdam: Amsterdam University Press.

Tomlin, I., 2009. Cloud Coffee House: The birth of cloud social networking and death of the old world corporation. Cirencester: Management Books 2000.

Appendix 1

An example of a proposed RDF schema applied to CC for The Sims, composed by the author.

This picture shows a skin for The Sims. The skin file (.skn, .cmx and .bmp) would be the subject. ‘The Seamstress’ would be the object. The predicate would be ‘has title’ (http://purl.org/dc/elements/1.1/title).

Here are examples of other RDF triples that can be applied to this item:

Subject: this item

Object: skin file

Predicate: Type (http://purl.org/dc/elements/1.1/type)

Subject: this item

Object: Ludi_Ling

Predicate: Creator (http://purl.org/dc/elements/1.1/creator)

Subject: this item

Object: 11/08/2011

Predicate: Date (http://purl.org/dc/elements/1.1/date)

Subject: this item

Object: birthday present for JM

Predicate: Description (http://purl.org/dc/elements/1.1/description)

Subject: this item

Object: RAR

Predicate: Format (http://purl.org/dc/elements/1.1/format)

Subject: this item

Object: Member Creations/Skins

Predicate: Category
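The triples above can be serialised in a machine-readable RDF format. The following Python sketch writes them as N-Triples using only the standard library. The Dublin Core element URIs are those listed above; the item URI and the `category` predicate URI are hypothetical placeholders, since the appendix gives no URIs for them. A real archive would more likely use an RDF library and mint stable identifiers of its own.

```python
from typing import List, Tuple

DC = "http://purl.org/dc/elements/1.1/"
# Hypothetical identifiers: an archive would mint its own URIs for these.
ITEM = "http://example.org/cc/skins/the-seamstress"
CATEGORY = "http://example.org/terms/category"

TRIPLES: List[Tuple[str, str, str]] = [
    (ITEM, DC + "title", "The Seamstress"),
    (ITEM, DC + "type", "skin file"),
    (ITEM, DC + "creator", "Ludi_Ling"),
    (ITEM, DC + "date", "11/08/2011"),
    (ITEM, DC + "description", "birthday present for JM"),
    (ITEM, DC + "format", "rar"),
    (ITEM, CATEGORY, "Member Creations/Skins"),
]

def escape_literal(text: str) -> str:
    """Apply N-Triples string escapes for backslash, quote and line breaks."""
    return (text.replace("\\", "\\\\").replace('"', '\\"')
                .replace("\n", "\\n").replace("\r", "\\r"))

def to_ntriples(triples: List[Tuple[str, str, str]]) -> str:
    """Serialise (subject URI, predicate URI, literal) triples as N-Triples."""
    lines = [f'<{s}> <{p}> "{escape_literal(o)}" .' for s, p, o in triples]
    return "\n".join(lines) + "\n"

print(to_ntriples(TRIPLES), end="")
```

Each output line is one subject-predicate-object statement, so records from many donations could simply be concatenated into a single catalogue file and queried later.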